41.10 Artificial Intelligence (10.0+)

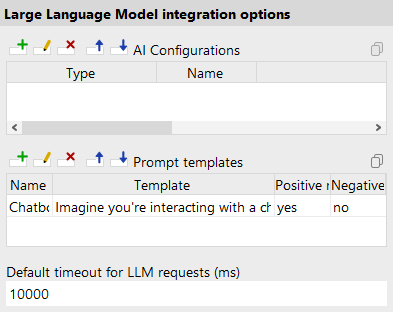

The following options serve to integrate LLMs ("Large Language Models") with QF-Test.

- LLM Configurations (System)

- Server script name: OPT_LLM_PROVIDERS

In this table you can make third-party LLMs available in QF-Test, for example for use with Check text with AI or ai.ask() (see The ai module). Each row represents a specific language model. The following table columns are available:

- Type

- The name of the API provider or type. If the API type you need is not yet available, please get in touch with our support team.

- Name

- The name of this configuration. Used to reference it in other places.

- URL

- The URL at which the LLM can be accessed. Please get this information from the documentation of the LLM provider. Usually ends with /v1 or similar.

- API key

- The API key serves to authenticate with the respective LLM provider. Get it from your LLM provider.

- Model name

- Many providers offer multiple models, such as "gpt-4o" or "gemini-2.0-flash". Consult your LLM provider for the model names that are actually available.
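To illustrate how the columns of one configuration row relate to each other, the following minimal sketch builds (but does not send) an HTTP request from such a row. It assumes a provider with an OpenAI-style API, that is, a `chat/completions` endpoint below the configured URL and Bearer-token authentication. The class `LlmConfig`, the function `build_chat_request` and all values are hypothetical and not part of QF-Test; other API types may expect a different request shape.

```python
import json
from dataclasses import dataclass
from urllib.request import Request


@dataclass
class LlmConfig:
    """Hypothetical mirror of one row of the LLM Configurations table."""
    type: str     # "Type" column
    name: str     # "Name" column
    url: str      # "URL" column, typically a base URL ending in /v1
    api_key: str  # "API key" column
    model: str    # "Model name" column


def build_chat_request(cfg: LlmConfig, prompt: str) -> Request:
    """Build an OpenAI-style chat completion request without sending it.

    Assumption: the provider accepts POST <url>/chat/completions with a
    Bearer token. This is not necessarily what QF-Test does internally.
    """
    body = {
        "model": cfg.model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return Request(
        cfg.url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": "Bearer " + cfg.api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration only.
cfg = LlmConfig(
    type="OpenAI-compatible",
    name="my-llm",
    url="https://llm.example.com/v1",
    api_key="sk-placeholder",
    model="gpt-4o",
)
request = build_chat_request(cfg, "Is this text a greeting? Hello!")
```

The sketch shows why the URL usually ends with /v1 or similar: for this kind of API the configured value is a base URL, and the endpoint path is appended to it.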

- Prompt templates (System)

- Server script name: OPT_LLM_PROMPT_TEMPLATES

In this table you can configure templates for LLM requests, for example for use with Check text with AI. Each row represents a specific template.

The following table columns are available:

- Name

- The name of this template, by which it can be referenced elsewhere.

- Template

- The text content of the template which would be sent to an LLM. The following placeholders are available:

  - {{context}}: Additional application-specific information, see Check context.

  - {{goodCompletions}}: A list of example results which should be interpreted as positive.

  - {{badCompletions}}: A list of example results which should be interpreted as negative.

  - {{completion}}: The actual result, e.g. from a Check text with AI.

  - {{positiveResponse}}: The string configured in "Positive response", to teach the model how to reply.

  - {{negativeResponse}}: The string configured in "Negative response", to teach the model how to reply.

- Positive response

- The response text that should be considered positive, e.g. "yes". Case-insensitive.

- Negative response

- The response text that should be considered negative, e.g. "no". Case-insensitive.
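The interplay of the template placeholders and the two response strings can be sketched as follows. This is a minimal illustration under stated assumptions, not QF-Test's implementation: the functions `render_template` and `interpret_response` are hypothetical, lists are assumed to be rendered as one bullet per entry, and the reply is assumed to be compared as a whole (ignoring case and surrounding whitespace) against the configured responses.

```python
import re

# Matches placeholders of the form {{name}}.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render_template(template, values):
    """Replace each known {{name}} placeholder in a single pass."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            return match.group(0)  # leave unknown placeholders untouched
        value = values[name]
        if isinstance(value, (list, tuple)):
            # Assumption: lists are rendered as one bullet per entry.
            return "\n".join("- " + str(item) for item in value)
        return str(value)
    return PLACEHOLDER.sub(substitute, template)


def interpret_response(reply, positive, negative):
    """Return True for a positive reply, False for a negative one,
    and None if the reply matches neither (comparison ignores case)."""
    normalized = reply.strip().lower()
    if normalized == positive.strip().lower():
        return True
    if normalized == negative.strip().lower():
        return False
    return None


template = (
    "Context: {{context}}\n"
    "Acceptable examples:\n{{goodCompletions}}\n"
    "Unacceptable examples:\n{{badCompletions}}\n"
    "Text to check: {{completion}}\n"
    "Answer with {{positiveResponse}} or {{negativeResponse}}."
)
values = {
    "context": "Greeting shown after login",
    "goodCompletions": ["Welcome back!", "Hello again"],
    "badCompletions": ["Error 500"],
    "completion": "Good to see you again",
    "positiveResponse": "yes",
    "negativeResponse": "no",
}
prompt = render_template(template, values)
```

Including {{positiveResponse}} and {{negativeResponse}} in the template text tells the model exactly which strings to reply with, which is what makes the later comparison against the two response columns reliable.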

- Default timeout for LLM requests (ms) (System)

- Server script name: OPT_LLM_REQUEST_TIMEOUT

The default timeout for LLM requests in milliseconds. If a request, for example one issued by a Check text with AI, takes longer than the configured duration, an exception is thrown.
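The timeout behavior described here can be sketched in general terms as follows. The example is an assumption-laden illustration rather than QF-Test code: `call_with_timeout` and `LlmTimeoutError` are hypothetical names, and the LLM request is simulated by plain functions. It converts a duration in milliseconds to seconds, waits at most that long for the result, and raises an exception otherwise.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout


class LlmTimeoutError(Exception):
    """Hypothetical exception type used only in this sketch."""


def call_with_timeout(func, timeout_ms):
    """Run func in a worker thread and wait at most timeout_ms for it."""
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(func)
    try:
        # The option is given in milliseconds, result() expects seconds.
        return future.result(timeout=timeout_ms / 1000.0)
    except FutureTimeout:
        raise LlmTimeoutError("no reply within %d ms" % timeout_ms) from None
    finally:
        # Do not block on a worker that is still busy.
        executor.shutdown(wait=False)


def fast_request():
    """Stand-in for a request that is answered immediately."""
    return "yes"


def slow_request():
    """Stand-in for a request that takes 300 ms."""
    time.sleep(0.3)
    return "yes"


reply = call_with_timeout(fast_request, 1000)

try:
    call_with_timeout(slow_request, 50)
    timed_out = False
except LlmTimeoutError:
    timed_out = True
```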

- Disable SSL verification for LLM requests (System)

- Server script name: OPT_LLM_REQUEST_DISABLE_SSL_VERIFICATION

If SSL issues occur during communication with an LLM, you can use this option to disable SSL verification for all network communication with external LLMs.
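As a rough illustration of what disabling SSL verification generally means for an HTTP client, the following sketch creates a TLS context with and without certificate checks using Python's standard `ssl` module. The function `make_ssl_context` is hypothetical and only models the effect of such a switch; it does not show how QF-Test implements the option.

```python
import ssl


def make_ssl_context(disable_verification):
    """Return a client TLS context, optionally without certificate checks."""
    context = ssl.create_default_context()
    if disable_verification:
        # Hostname checking must be switched off before verify_mode
        # can be set to CERT_NONE.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context


verified = make_ssl_context(False)
unverified = make_ssl_context(True)
```

With verification disabled, the client accepts any certificate, including self-signed or expired ones, so the identity of the server is no longer checked.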